Mastering Linear Regression: A Simple Approach

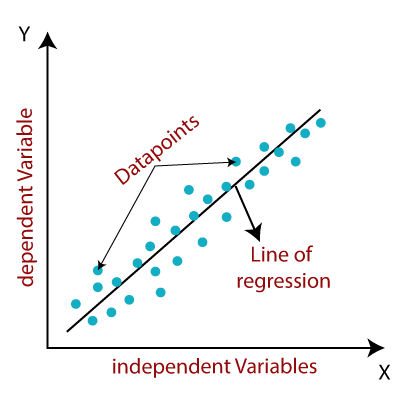

Linear regression is a statistical method used to model the linear relationship between a dependent variable and one or more independent variables.

Why is it called “Linear”?

It is called “Linear” regression because the relationship between the variables is modeled as a linear equation.

An analogy for linear regression might be the relationship between a person’s height and weight. In general, taller people tend to weigh more than shorter people. This relationship can be modeled using a linear equation, where weight is the dependent variable and height is the independent variable.

The equation would take the form:

weight = a * height + b

where a and b are constants. Using this equation, we can predict a person’s weight from their height (or, by rearranging it, estimate height from weight). For example, if a person is 5 feet tall and the constants are a = 100 and b = 50 (values chosen only to illustrate the arithmetic), then the predicted weight is 550 pounds (100 * 5 + 50).
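To make the arithmetic concrete, here is a minimal sketch of the prediction step. The function name `predict_weight` is just an illustrative choice, and the constants are the same made-up values used in the example, not parameters fitted to real data.

```python
def predict_weight(height, a, b):
    """Predict weight from height with the linear model weight = a * height + b."""
    return a * height + b


# Illustrative constants only; they are not fitted to real data.
a, b = 100, 50

predicted = predict_weight(5, a, b)
print(predicted)  # 100 * 5 + 50 = 550
```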

How do we decide the parameter values?

In the equation weight = a * height + b, the constants a and b represent the parameters of the model. These parameters are learned from the data using an optimization algorithm. The goal of the optimization algorithm is to find the values of a and b that best fit the data, meaning that they minimize the difference between the predicted values and the actual values.

Some optimization algorithms used for Linear Regression

There are several optimization algorithms that can be used to learn the parameters of a linear regression model, including:

- Gradient descent: This is an iterative algorithm that starts with initial guesses for the parameters and then updates them in the direction that reduces the error of the model.

- Normal equation: This is a closed-form solution that directly computes the optimal values of the parameters using a formula.

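- Least squares: Strictly speaking, this is the fitting criterion rather than a separate algorithm. It chooses the parameters that minimize the sum of the squared errors between the predicted values and the observed values. The normal equation solves this problem exactly, while gradient descent solves it iteratively.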

The choice of optimization algorithm will depend on the characteristics of the data and the specific requirements of the application.
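As a rough illustration of the closed-form route, the sketch below fits a line with the normal equation and compares it with NumPy's least-squares solver. The toy data is hypothetical and generated without noise from weight = 40 * height - 60, so both approaches should recover those two constants.

```python
import numpy as np

# Hypothetical toy data: heights (feet) and weights (pounds) generated exactly
# from weight = 40 * height - 60, so the fit should recover those constants.
heights = np.array([5.0, 5.5, 6.0, 6.5])
weights = 40.0 * heights - 60.0

# Design matrix with a column of ones so the intercept b is learned too.
X = np.column_stack([heights, np.ones_like(heights)])

# Normal equation: solve (X^T X) theta = X^T y for theta = [a, b].
theta_normal = np.linalg.solve(X.T @ X, X.T @ weights)

# np.linalg.lstsq minimizes the same sum of squared errors and is usually
# the numerically safer choice.
theta_lstsq, *_ = np.linalg.lstsq(X, weights, rcond=None)

a, b = theta_normal
print(f"a = {a:.2f}, b = {b:.2f}")
```

For a handful of features the closed-form solution is usually the simplest option. Gradient descent tends to become attractive when the number of features or samples is large enough that solving the linear system is expensive.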

How does Gradient Descent work for parameter optimization?

It works by iteratively updating the values of the parameters in the direction that reduces the error. Here’s a general outline of how gradient descent works for linear regression:

- Start with initial guesses for the parameters (a and b in the equation weight = a * height + b).

- Calculate the error of the model using the current parameter values. The error is calculated using a cost function (several cost functions can be used).

- Calculate the gradient of the error/cost function with respect to the parameters. The gradient is a vector that points in the direction of the greatest increase in the error.

- Update the parameter values by moving in the opposite direction of the gradient. This step is called “stepping” or “taking a step” in the direction opposite the gradient.

- Repeat steps 2–4 until the error of the model is minimized or the change in the parameter values is small enough.

How to calculate the error using the Cost Function? (Step 2)

There are several ways to calculate the error, depending on the specific requirements of the application.

Here are a few common error measures for linear regression:

- Mean squared error (MSE): This is the average of the squared differences between the predicted values and the actual values. The formula for MSE is:

MSE = (1/N) * sum((predictions - targets)²)

where N is the number of samples in the training data, predictions is the vector of predicted values, and targets is the vector of observed values.

For example, if you have a training set with three samples and the predicted values are [10, 20, 30] and the actual values are [15, 25, 35], then the MSE would be:

MSE = (1/3) * [(10 - 15)² + (20 - 25)² + (30 - 35)²]

MSE = (1/3) * [25 + 25 + 25] = 75/3

MSE = 25

The goal of training a linear regression model is to find the values of the parameters (a and b in the equation weight = a * height + b) that minimize the MSE.

- Mean absolute error (MAE): This is the average of the absolute differences between the predicted values and the observed values. The formula for MAE is:

MAE = (1/N) * sum(|predictions - targets|)

where N is the number of samples in the training data, predictions is the vector of predicted values, and targets is the vector of observed values.

- Root mean squared error (RMSE): This is the square root of the MSE. The formula for RMSE is:

RMSE = sqrt(MSE)

The choice of error measure depends on the application. MSE and RMSE square each error, so they penalize large errors more heavily, which is useful when large errors are particularly undesirable. RMSE is also expressed in the same units as the dependent variable, which makes it easier to interpret than MSE. MAE weights every error in proportion to its size, so it is less sensitive to outliers and may be preferred when a few extreme values should not dominate the fit.
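The three error measures are short enough to compute directly. This is a minimal NumPy sketch that evaluates all of them for the three-sample example with predicted values [10, 20, 30] and actual values [15, 25, 35].

```python
import numpy as np

predictions = np.array([10.0, 20.0, 30.0])
targets = np.array([15.0, 25.0, 35.0])

errors = predictions - targets

mse = np.mean(errors ** 2)     # mean of the squared errors
mae = np.mean(np.abs(errors))  # mean of the absolute errors
rmse = np.sqrt(mse)            # square root of the MSE

print(f"MSE = {mse}, MAE = {mae}, RMSE = {rmse}")
```

Every error in this example has the same size (5), so MAE and RMSE coincide. With a mix of small and large errors, RMSE would come out larger than MAE.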

The predictions themselves come from the simple linear regression model. Once the parameter values a and b have been learned (for example, by gradient descent), each prediction is obtained as follows:

weight_1 = a * height_1 + b

prediction_1 = weight_1

predictions = [prediction_1, prediction_2, ..., prediction_n]

This is a list/vector containing the individual prediction for each input height.

How to use gradient descent on error/cost function? (Step 3)

The gradient of a cost function is a vector that points in the direction of the greatest increase in the error or cost function. In the context of linear regression, the gradient is used to update the parameter values in the direction that reduces the error of the model.

To calculate the gradient of the error with respect to the parameters (a and b in the equation weight = a * height + b), you need to take the partial derivative of the error with respect to each parameter.

The partial derivative is a measure of how much the error changes when you change one parameter while holding the other parameter constant.

Here’s the general formula for calculating the gradient of the error function (i.e. MSE) with respect to the parameters:

For the parameter a:

gradient_a = (2/N) * sum((predictions - targets) * inputs)

For the parameter b:

gradient_b = (2/N) * sum(predictions - targets)

where N is the number of samples in the training data, predictions is the vector of predicted values, targets is the vector of actual values, and inputs is the vector of independent variable values (i.e. height in this case).
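One way to build confidence in these gradient formulas is to compare them with a numerical approximation. The sketch below does that with central finite differences. The function names and the toy data are illustrative assumptions.

```python
import numpy as np


def mse(a, b, inputs, targets):
    """Mean squared error of the line a * x + b on the given data."""
    predictions = a * inputs + b
    return np.mean((predictions - targets) ** 2)


def mse_gradients(a, b, inputs, targets):
    """Analytic partial derivatives of the MSE with respect to a and b."""
    n = len(inputs)
    residuals = (a * inputs + b) - targets
    gradient_a = (2.0 / n) * np.sum(residuals * inputs)
    gradient_b = (2.0 / n) * np.sum(residuals)
    return gradient_a, gradient_b


# Hypothetical toy data and starting parameters.
inputs = np.array([1.0, 2.0, 3.0])
targets = np.array([2.0, 4.0, 6.0])
a, b = 0.0, 0.0

gradient_a, gradient_b = mse_gradients(a, b, inputs, targets)

# Central finite differences: nudge one parameter while holding the other fixed.
eps = 1e-6
numeric_a = (mse(a + eps, b, inputs, targets) - mse(a - eps, b, inputs, targets)) / (2 * eps)
numeric_b = (mse(a, b + eps, inputs, targets) - mse(a, b - eps, inputs, targets)) / (2 * eps)

print(gradient_a, numeric_a)
print(gradient_b, numeric_b)
```

Both gradients are negative at this starting point, which means that increasing a and b would reduce the error.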

To update the parameter values using the gradient, you can use the following formula:

- a = a - learning_rate * gradient_a

- b = b - learning_rate * gradient_b

Where learning_rate is a hyperparameter that controls the size of the step taken in the direction opposite the gradient.
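Putting the gradient and the update rule together gives a complete training loop. What follows is a minimal sketch rather than a production implementation. The default learning rate, iteration cap, and stopping tolerance are assumptions chosen to suit this small noise-free dataset.

```python
import numpy as np


def gradient_descent(inputs, targets, learning_rate=0.05,
                     max_iterations=2000, tolerance=1e-12):
    """Fit y = a * x + b by gradient descent on the MSE."""
    a, b = 0.0, 0.0  # initial guesses
    n = len(inputs)
    for _ in range(max_iterations):
        residuals = (a * inputs + b) - targets
        gradient_a = (2.0 / n) * np.sum(residuals * inputs)
        gradient_b = (2.0 / n) * np.sum(residuals)

        # Step in the direction opposite the gradient.
        step_a = learning_rate * gradient_a
        step_b = learning_rate * gradient_b
        a -= step_a
        b -= step_b

        # Stop early once the parameters have essentially stopped changing.
        if max(abs(step_a), abs(step_b)) < tolerance:
            break
    return a, b


# Hypothetical data generated from y = 2 * x + 1.
inputs = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
targets = 2.0 * inputs + 1.0

a, b = gradient_descent(inputs, targets)
print(f"a = {a:.4f}, b = {b:.4f}")
```

On this data the loop recovers a close to 2 and b close to 1. On real data the learning rate usually needs tuning, and scaling the inputs first tends to help convergence.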

It’s worth noting that this is just a general outline of how to calculate the gradient and update the parameter values. There are many variations and details that depend on the specific characteristics of the data and the requirements of the application.

How do we control the learning cycle using gradient? (Step 4)

The size of the step taken in the direction opposite the gradient is controlled by a hyperparameter called the “learning rate”.

A larger learning rate means that the model will take larger steps in each iteration, which can help the model converge faster but can also make it more likely to overshoot the minimum.

A smaller learning rate means that the model will take smaller steps, which can help the model avoid overshooting but can also make it slower to converge.

It’s also worth noting that gradient descent is an iterative algorithm, meaning that it will repeat the above steps multiple times until the parameter values converge to a minimum. The number of iterations can be controlled using a hyperparameter called the maximum number of iterations.
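The effect of the learning rate is easy to see on a small example. The sketch below runs the same gradient descent loop for 100 iterations with three learning rates and compares the final MSE. The data and the specific rates are assumptions, and the value at which the steps start to diverge depends on the data.

```python
import numpy as np

# Hypothetical noise-free data generated from y = 2 * x + 1.
inputs = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
targets = 2.0 * inputs + 1.0


def final_mse(learning_rate, max_iterations=100):
    """Run gradient descent from a = b = 0 and return the final MSE."""
    a, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(max_iterations):
        residuals = (a * inputs + b) - targets
        gradient_a = (2.0 / n) * np.sum(residuals * inputs)
        gradient_b = (2.0 / n) * np.sum(residuals)
        a -= learning_rate * gradient_a
        b -= learning_rate * gradient_b
    return np.mean(((a * inputs + b) - targets) ** 2)


initial_mse = np.mean(targets ** 2)  # error at the starting point a = b = 0

mse_small = final_mse(0.01)   # small steps: improving, but still far from done
mse_medium = final_mse(0.1)   # larger steps: much closer to the minimum
mse_large = final_mse(0.2)    # too large for this data: the error blows up

print(initial_mse, mse_small, mse_medium, mse_large)
```

With the same iteration budget, the middle rate ends up with the lowest error, the small rate is still converging, and the largest rate leaves the model worse off than where it started.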

Linear regression can be used to model more complex relationships as well. Still, the basic idea is to find the best-fitting linear equation to describe the relationship between two or more variables.

Overfitting in Linear Regression

In linear regression, overfitting occurs when the model fits the training data too well and does not generalize well to new, unseen data. This can happen if the model has too many parameters relative to the amount of training data, or if the model is overly complex for the underlying structure of the data.

When a model overfits the training data, it tends to have a low error on the training data but a high error on new, unseen data. This is because the model has learned patterns in the training data that are not present in the broader population, and these patterns do not generalize to new data.

Overfitting can be a problem because the goal of a machine learning model is to generalize to new data, not just to fit the training data. If a model overfits the training data, it is likely to perform poorly on new data, which is not what we want.

How to prevent overfitting in Linear Regression?

To prevent overfitting in linear regression, it can be helpful to use techniques such as regularization or cross-validation.

Regularization involves adding a penalty term to the error function that grows with the size of the parameters, which discourages overly complex models.

Cross-validation involves splitting the training data into multiple folds, repeatedly training the model on all but one fold, and evaluating it on the held-out fold. Averaging the held-out errors gives a better estimate of generalization performance than the training error does.
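Here is a minimal sketch of k-fold cross-validation for a straight-line fit, written with plain NumPy. The helper names (`fit_line`, `k_fold_mse`), the synthetic data, and the choice of five folds are all assumptions made for illustration.

```python
import numpy as np


def fit_line(x, y):
    """Least-squares fit of y = a * x + b; returns the array [a, b]."""
    X = np.column_stack([x, np.ones_like(x)])
    theta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return theta


def k_fold_mse(x, y, k=5, seed=0):
    """Return the held-out MSE of each of the k folds."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))
    folds = np.array_split(indices, k)
    scores = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        a, b = fit_line(x[train_idx], y[train_idx])
        predictions = a * x[val_idx] + b
        scores.append(np.mean((predictions - y[val_idx]) ** 2))
    return np.array(scores)


# Hypothetical data: a line with slope 3 and intercept 2 plus Gaussian noise.
rng = np.random.default_rng(42)
x = np.linspace(0.0, 10.0, 50)
y = 3.0 * x + 2.0 + rng.normal(0.0, 1.0, size=x.shape)

scores = k_fold_mse(x, y, k=5)
print("per-fold MSE:", scores)
print("mean held-out MSE:", scores.mean())
```

If the mean held-out error is much larger than the training error, that gap is a sign of overfitting.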

Variations of Linear Regression

There are several variations of linear regression that are used to address different types of data and modeling goals. Here are a few examples:

- Simple Linear Regression: This is the most basic form of linear regression, which models the relationship between a single independent variable (x) and a dependent variable (y).

y = a * x + b

Where a and b are constants called the parameters of the model.

- Multiple Linear Regression: This is an extension of simple linear regression that allows for multiple independent variables. The relationship between the dependent variable (y) and multiple independent variables (x1, x2, …, xn) is modeled as a linear equation of the form:

y = a1 * x1 + a2 * x2 + ... + an * xn + b

Where a1, a2, …, an are constants called the parameters of the model, and b is a constant called the bias term. The goal of multiple linear regression is to find the values of a1, a2, …, an, and b that best fit the data, meaning that they minimize the difference between the predicted values and the actual values.
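With several independent variables the fit works the same way, only with a wider input matrix. The sketch below uses hypothetical noise-free data with three features, so the least-squares solution should recover the coefficients and the bias that generated it.

```python
import numpy as np

# Hypothetical noise-free data from y = 2 * x1 - 3 * x2 + 0.5 * x3 + 4.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
true_coefficients = np.array([2.0, -3.0, 0.5])
true_bias = 4.0
y = X @ true_coefficients + true_bias

# Append a column of ones so the bias term b is estimated with the rest.
X_with_bias = np.column_stack([X, np.ones(len(X))])
params, *_ = np.linalg.lstsq(X_with_bias, y, rcond=None)

coefficients, bias = params[:-1], params[-1]
print("a1..a3:", coefficients)
print("b:", bias)
```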

- Polynomial Regression: This is a form of linear regression that models the relationship between the dependent variable (y) and the independent variable (x) as an n-th degree polynomial equation of the form:

y = a1 * x^n + a2 * x^(n-1) + ... + an * x + b

Where a1, a2, …, an and b are constants called the parameters of the model, and n is the degree of the polynomial.

It’s worth noting that polynomial regression can be used to model non-linear relationships between variables, but it is still a form of linear regression because the model is linear in its parameters: y is a weighted sum of the terms x^n, …, x, with the parameters as the weights. The non-linearity comes from the polynomial terms, which are simply treated as additional input features, so the same fitting methods apply.
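Because the powers of x can be treated as ordinary input columns, a polynomial can be fit with the same least-squares solver as a straight line. This sketch assumes a degree-2 polynomial and hypothetical noise-free data, and uses `np.vander` to build the columns x², x, and 1.

```python
import numpy as np

# Hypothetical noise-free data from y = 1.5 * x^2 - 2 * x + 0.5.
x = np.linspace(-2.0, 2.0, 9)
y = 1.5 * x ** 2 - 2.0 * x + 0.5

# np.vander(x, 3) builds the columns [x^2, x, 1] (decreasing powers).
degree = 2
X_poly = np.vander(x, degree + 1)

# Ordinary least squares on the expanded features.
params, *_ = np.linalg.lstsq(X_poly, y, rcond=None)
print(params)  # coefficients for x^2, x, and the constant term
```

The curve is non-linear in x, but the solver only ever sees a linear combination of three columns.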

- Ridge Regression: This is a variation of linear regression that adds a regularization term to the error function to prevent overfitting. The regularization term is a penalty on the size of the parameters, which helps to prevent the model from fitting the training data too closely and reduces the risk of overfitting.

The error function for ridge regression is defined as the sum of the squared errors between the predicted values and the observed values, plus a regularization term:

Error = sum((predictions - targets)^2) + alpha * sum(parameters^2)

Where alpha is a hyperparameter that controls the strength of the regularization. Shrinking the parameters toward zero introduces some bias into the predictions in exchange for lower variance. Increasing alpha strengthens the regularization and therefore increases this bias, while decreasing alpha reduces it. Setting alpha = 0 recovers ordinary least squares.
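For this error function the ridge parameters have a closed-form solution, obtained by setting the gradient to zero: (XᵀX + alpha * I) w = Xᵀy. The sketch below applies it to hypothetical data. To stay short, it assumes there is no bias term, whereas in practice the bias is usually left unpenalized.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge solution: solve (X^T X + alpha * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


# Hypothetical data with three features and a little noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(30, 3))
y = X @ np.array([3.0, -2.0, 1.0]) + rng.normal(0.0, 0.1, size=30)

w_ols = ridge_fit(X, y, alpha=0.0)     # no penalty: ordinary least squares
w_ridge = ridge_fit(X, y, alpha=10.0)  # penalized: coefficients shrink

print("alpha = 0: ", w_ols)
print("alpha = 10:", w_ridge)
```

The penalized coefficients are smaller in overall magnitude than the unpenalized ones, and the gap grows as alpha increases.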

- Lasso Regression: Like ridge regression, lasso regression adds a penalty on the size of the parameters, but it uses a different form of regularization that can result in some coefficients being exactly equal to zero. The error function for lasso regression is defined as the sum of the squared errors between the predicted values and the observed values, plus a regularization term:

Error = sum((predictions - targets)^2) + alpha * sum(|parameters|)

One key difference between lasso and ridge regression is that the lasso regularization term is the sum of the absolute values of the parameters, whereas the ridge regularization term is the sum of the squared parameters. Both penalties shrink the parameters toward zero, but the absolute-value penalty can set some of them exactly equal to zero if they are not important for the model. This can be useful for feature selection.
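The exact zeros come from a soft-thresholding step. The sketch below minimizes the lasso error function above with proximal gradient descent (often called ISTA), which is one of several possible solvers and not necessarily what any particular library uses. The function names and the tiny two-feature dataset are assumptions, and the bias term is omitted for brevity.

```python
import numpy as np


def soft_threshold(values, threshold):
    """Shrink each value toward zero; values within the threshold become 0."""
    return np.sign(values) * np.maximum(np.abs(values) - threshold, 0.0)


def lasso_ista(X, y, alpha, iterations=500):
    """Minimize sum((X @ w - y)^2) + alpha * sum(|w|) by proximal gradient."""
    # Step size 1 / L, where L = 2 * (largest eigenvalue of X^T X) bounds
    # the curvature of the squared-error term.
    step = 1.0 / (2.0 * np.linalg.eigvalsh(X.T @ X).max())
    w = np.zeros(X.shape[1])
    for _ in range(iterations):
        gradient = 2.0 * X.T @ (X @ w - y)
        w = soft_threshold(w - step * gradient, step * alpha)
    return w


# Hypothetical data: the first feature matters (weight 3.0),
# the second barely does (weight 0.1).
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
y = X @ np.array([3.0, 0.1])

w_ols = lasso_ista(X, y, alpha=0.0)    # no penalty
w_lasso = lasso_ista(X, y, alpha=1.0)  # L1 penalty

print("alpha = 0:", w_ols)
print("alpha = 1:", w_lasso)
```

With the penalty switched on, the weak second coefficient is set exactly to zero while the strong first coefficient is only shrunk slightly. This is the feature-selection behavior described above.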

- Elastic Net Regression: Like ridge and lasso regression, elastic net regression adds a penalty on the size of the parameters to prevent overfitting, but it uses a combination of the L1 (lasso) and L2 (ridge) regularization terms. The error function for elastic net regression is defined as the sum of the squared errors between the predicted values and the observed values, plus a regularization term:

l1_penalty = alpha * l1_ratio * sum(|parameters|)

l2_penalty = alpha * (1 - l1_ratio) * sum(parameters^2)

Error = sum((predictions - targets)^2) + l1_penalty + l2_penalty

The regularization term is a combination of the L1 regularization term (the sum of the absolute values of the parameters) and the L2 regularization term (the sum of the squared parameters). The hyperparameters alpha and l1_ratio control the strength of the regularization and the balance between the L1 and L2 terms, respectively: l1_ratio = 1 gives the lasso penalty and l1_ratio = 0 gives the ridge penalty. The larger the value of alpha, the stronger the regularization and the smaller the values of the parameters will be.

Elastic net regression can be useful when there are multiple correlated variables in the data, as it can select a subset of the most important variables and shrink the coefficients of the other variables toward zero. This can be more effective than either ridge or lasso regression alone, as it combines the benefits of both methods.
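To see how the two hyperparameters interact, here is a small sketch that evaluates the elastic net error for given parameters. It assumes the common convention in which alpha scales the whole penalty and l1_ratio splits it between the L1 and L2 terms. Libraries may scale the individual terms differently, so treat the exact constants as an assumption.

```python
import numpy as np


def elastic_net_error(predictions, targets, parameters, alpha, l1_ratio):
    """Squared error plus a weighted mix of L1 and L2 penalties."""
    squared_error = np.sum((predictions - targets) ** 2)
    l1_penalty = alpha * l1_ratio * np.sum(np.abs(parameters))
    l2_penalty = alpha * (1.0 - l1_ratio) * np.sum(parameters ** 2)
    return squared_error + l1_penalty + l2_penalty


# Hypothetical values chosen to keep the arithmetic easy to follow.
predictions = np.array([1.0, 2.0])
targets = np.array([1.0, 3.0])
parameters = np.array([2.0, -1.0])

lasso_like = elastic_net_error(predictions, targets, parameters, alpha=0.5, l1_ratio=1.0)
ridge_like = elastic_net_error(predictions, targets, parameters, alpha=0.5, l1_ratio=0.0)
mixed = elastic_net_error(predictions, targets, parameters, alpha=0.5, l1_ratio=0.5)

print(lasso_like, ridge_like, mixed)
```

Here the squared error is 1, the sum of absolute parameters is 3, and the sum of squared parameters is 5, so the three results are 2.5, 3.5, and 3.0.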

In general, the difference between these variations of linear regression is the way that they handle the relationship between the dependent and independent variables and the way that they address overfitting. Simple and multiple linear regression models are suitable for modeling linear relationships, while polynomial regression is suitable for modeling non-linear relationships. Ridge, lasso, and elastic net regression are used to address overfitting by adding a regularization term to the error function.

Interview Questions related to Linear Regression

1 — How to choose between MAE or RMSE for a cost function?

The RMSE may be more appropriate if the errors are normally distributed, while the mean absolute error may be more appropriate if the errors are skewed or have a heavy tail.

2 — Why and how to use the R-Squared measure?

It is a measure of the proportion of the variance in the dependent variable that is explained by the model. R-squared is calculated as one minus the ratio of the residual sum of squares (the squared differences between the observed and predicted values) to the total sum of squares (the squared differences between the observed values and their mean). Mathematically, it is expressed as:

R-squared = 1 - (sum((targets - predictions)²) / sum((targets - mean(targets))²))

Where predictions is the vector of predicted values, targets is the vector of observed values, and mean(targets) is the mean of the observed values.

A value of R-squared close to 1 indicates a good fit, while a value close to 0 indicates a poor fit. It is important to note that it is not a perfect measure and can be misleading in some cases.

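For example, adding more independent variables to a least-squares model never decreases R-squared on the training data, and usually increases it, even if the additional variables are not actually useful for predicting the dependent variable. This can reward overfitting and poor generalization to new data, which is why adjusted R-squared or a score computed on held-out data is often reported alongside it.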
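R-squared is straightforward to compute from its definition. This minimal sketch uses a hypothetical helper named `r_squared` and three made-up samples. It also checks two reference points: always predicting the mean gives 0, and perfect predictions give 1.

```python
import numpy as np


def r_squared(targets, predictions):
    """R-squared = 1 - (residual sum of squares / total sum of squares)."""
    ss_residual = np.sum((targets - predictions) ** 2)
    ss_total = np.sum((targets - np.mean(targets)) ** 2)
    return 1.0 - ss_residual / ss_total


targets = np.array([15.0, 25.0, 35.0])
predictions = np.array([10.0, 20.0, 30.0])

score = r_squared(targets, predictions)
baseline = r_squared(targets, np.full_like(targets, targets.mean()))
perfect = r_squared(targets, targets)

print(score, baseline, perfect)
```

The residual sum of squares here is 75 and the total sum of squares is 200, so the score is 1 - 75/200 = 0.625.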

3 — What is the difference between simple linear regression and multiple linear regression?

Simple linear regression models the relationship between a single independent variable and a dependent variable, while multiple linear regression models the relationship between multiple independent variables and a dependent variable.

4 — How does polynomial regression differ from linear regression?

Polynomial regression is a form of linear regression that models the relationship between the dependent variable and the independent variable as an n-th degree polynomial. It can be used to model non-linear relationships, but it is still a form of linear regression because the model is linear in its parameters: the powers of x are treated as additional input features, and the prediction is a weighted sum of them.

5 — What's the difference between Linear Regression and Logistic Regression?

Linear regression is used when the dependent variable is continuous and can take on any value within a certain range. Logistic regression is used when the dependent variable is binary and can only take on two values.

The main difference between linear and logistic regression is the form of the dependent variable and the way the models are evaluated. Linear regression models the dependent variable as a linear function of the independent variables, while logistic regression models the probability of a binary outcome as a function of the independent variables. Linear regression is evaluated using metrics such as the coefficient of determination (R-squared) and the root mean squared error (RMSE), while logistic regression is evaluated using metrics such as classification accuracy and log loss.

6 — Why Logistic Regression is referred to as a regression model?

Despite the fact that the dependent variable in logistic regression is binary, the model is still referred to as a regression because it is used to model the relationship between variables and to make predictions based on this relationship. The term “regression” in this context refers to the fact that the model is used to predict a continuous outcome (the probability of the binary event occurring) based on the values of the independent variables, rather than the specific form of the dependent variable.